eRacks Systems Tech Blog

Open Source Experts Since 1999

Living in a Land of High Capacity Storage

The history of computing is littered with examples of storage capacity outstripping software’s ability to make effective use of it (for a list of examples, and an entertaining read, check out this old article from 2000: http://www.dewassoc.com/kbase/hard_drives/hard_drive_size_barriers.htm). Today is certainly no exception. With RAID arrays in excess of 32 TB (one terabyte is ~1000 gigabytes), one must research carefully to avoid discovering the hard way that the software configuration one wishes to use supports only a small fraction of the available disk space. This article was compiled to help others make wise decisions when working with high capacity storage.

There are two basic considerations to take into account. The first is partitioning. The second is your choice of filesystem (which in turn is often determined, at least in part, by the operating system).

Partitioning

One of the most fundamental logical units of storage, treated by most operating systems as a “disk” in its own right, is the partition. For those who are unaware of what a partition is or why it’s important (those who already know might want to skip ahead to the next paragraph), imagine the North American continent. Though in reality it’s a single physical chunk of land, it’s separated into logical boundaries: Canada, the United States and Mexico. Without these boundaries to demarcate the land available to each country, it would be much more difficult to decide which resources belong to whom. In the same vein, partitions exist to logically separate a hard disk into regions of storage designated for various purposes.

The problem is that the original BIOS-style (MBR) partition table can only describe partitions of up to 2 TB. This is because it stores each partition’s starting block and length as 32-bit numbers, so it can only keep track of up to 2^32 blocks, each typically 512 bytes in size. Multiplying these two quantities gives 2^41 bytes, or 2 TiB (about 2.2 TB). There are two ways to approach this problem.
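To make the arithmetic concrete, here is a minimal Python sketch that decodes the four primary entries of a classic MBR partition table (the 64-byte table at byte offset 446 of the first sector) and shows that the start and length fields are 32-bit. It assumes 512-byte sectors, the sample sector is synthetic, and the function name `mbr_partitions` is only illustrative.

```python
import struct

SECTOR_SIZE = 512        # bytes per block; 512 is typical and assumed here
TABLE_OFFSET = 446       # the MBR partition table starts at byte 0x1BE
ENTRY_SIZE = 16          # four 16-byte entries, then the 0x55AA signature
# status, CHS start, type, CHS end, first LBA, sector count
ENTRY_FORMAT = "<B3sB3sII"


def mbr_partitions(sector0: bytes):
    """Return (type, first_lba, sector_count) for each non-empty MBR entry."""
    if len(sector0) < 512 or sector0[510:512] != b"\x55\xaa":
        raise ValueError("not an MBR: missing 0x55AA signature")
    parts = []
    for i in range(4):
        _status, _chs1, ptype, _chs2, first_lba, count = struct.unpack_from(
            ENTRY_FORMAT, sector0, TABLE_OFFSET + i * ENTRY_SIZE)
        if ptype != 0:  # type 0 marks an unused slot
            parts.append((ptype, first_lba, count))
    return parts


# "I" is an unsigned 32-bit field, so no partition can exceed 2**32 sectors.
MAX_PARTITION_BYTES = 2**32 * SECTOR_SIZE   # 2**41 bytes = 2 TiB

# Build a synthetic sector holding one Linux (0x83) partition of maximal length.
sector = bytearray(512)
sector[510:512] = b"\x55\xaa"
struct.pack_into(ENTRY_FORMAT, sector, TABLE_OFFSET,
                 0x00, b"\0\0\0", 0x83, b"\0\0\0", 2048, 0xFFFFFFFF)

for ptype, first_lba, count in mbr_partitions(bytes(sector)):
    size = count * SECTOR_SIZE
    print(f"type 0x{ptype:02x}: {size / 2**40:.3f} TiB ({size / 1e12:.2f} TB)")
print(f"ceiling: {MAX_PARTITION_BYTES / 2**40:.0f} TiB")
```

Even with every bit of the length field set, the partition tops out just under 2 TiB.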

The first is to accept the limitation and create many smaller partitions. The disadvantage, of course, is that your data must then be spread across many separate partitions. The Logical Volume Manager (LVM) on Linux can somewhat mitigate this problem by tying them together into a single logical disk, but even so, we can certainly do better.

The second, and in my opinion superior, choice is to stop using traditional BIOS-style partitions and instead use a relatively new standard known as GPT (GUID Partition Table). Unlike BIOS-style partition tables, GPT uses 64-bit block addresses, meaning that each partition can be as large as 2^64 blocks × 512 bytes per block = 2^73 bytes, or 8 ZiB (roughly 9.4 ZB; that’s zettabytes, and for comparison, 1 ZB = 1 billion TB). That’s A LOT better than 2 TB!
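For comparison, a GPT partition entry is a 128-byte record whose first and last block addresses are unsigned 64-bit integers. The sketch below packs and unpacks one such entry with Python’s `struct` module, assuming 512-byte blocks; the helper names `pack_entry` and `entry_size_bytes` are illustrative, the 32 TB example size simply mirrors the RAID arrays mentioned earlier, and the type GUID is the one commonly used for Linux filesystem data.

```python
import struct
import uuid

SECTOR_SIZE = 512
# type GUID, unique GUID, first LBA, last LBA (inclusive), attributes, UTF-16LE name
ENTRY_FORMAT = "<16s16sQQQ72s"   # 128 bytes; "Q" is an unsigned 64-bit field
LINUX_DATA = uuid.UUID("0FC63DAF-8483-4772-8E79-3D69D8477DE4")


def pack_entry(type_guid, part_guid, first_lba, last_lba, name):
    """Pack one GPT partition entry; GUIDs use the mixed-endian on-disk form."""
    # struct pads the 72-byte name field with NUL bytes.
    return struct.pack(ENTRY_FORMAT, type_guid.bytes_le, part_guid.bytes_le,
                       first_lba, last_lba, 0, name.encode("utf-16-le"))


def entry_size_bytes(entry):
    """Size in bytes of the partition described by a packed entry."""
    _t, _u, first_lba, last_lba, _attrs, _name = struct.unpack(ENTRY_FORMAT, entry)
    return (last_lba - first_lba + 1) * SECTOR_SIZE


# A 32 TB (decimal) partition: far more sectors than a 32-bit MBR field can count.
sectors = 32 * 10**12 // SECTOR_SIZE
entry = pack_entry(LINUX_DATA, uuid.uuid4(), 2048, 2048 + sectors - 1, "data")
print(entry_size_bytes(entry) / 1e12, "TB")
print("GPT ceiling:", 2**64 * SECTOR_SIZE / 2**70, "ZiB")
```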

The tradeoff, if you choose to go the GPT route, is that not all operating systems support it. At the time of this writing, the following are known NOT to support GPT (or at least not without jumping through some hoops): FreeBSD (partial support for GPT partitions exists); OpenBSD; NetBSD (GPT filesystems are supported via dkwedges, but cannot be booted from directly); OpenSolaris (again, GPT is supported for separate data partitions, but cannot be booted from directly); Fedora Core, CentOS and Red Hat Enterprise Linux; and Windows XP or below (GPT only works in Windows XP x64, and only for separate data partitions). This is not an exhaustive list. By contrast, here is a list (also not exhaustive) of operating systems that do fully support GPT partitions out of the box: Debian, Ubuntu and Gentoo Linux, Windows Vista and Windows 7.

Your choice of operating system will therefore determine whether or not you can take advantage of what GPT has to offer.

Filesystem Considerations

Now that we’ve got the partitioning figured out, we’ll have to consider filesystem limitations. The rest of this article assumes that you’ve either made use of a GPT partition or that you’re on Linux and have created one large LVM volume.

You might be tempted to think, “now that I have a large partition, I just have to format it and I’m done!” Sometimes this is true, as with any version of Windows that supports GPT partitions, and with *BSD (assuming you’ve jumped through the hoops necessary to create the partition in the first place). If you plan to use Linux, however, you’ll need to be a little more careful, as you have several filesystems to choose from, and not all of them support large volumes.

The default Linux filesystem for a long time was ext3. It does support large filesystems, but because it uses 32-bit block numbers, with a 4K block size it can only address up to 16 TB of space. You can create an ext3 filesystem with an 8K block size, for a maximum size of 32 TB, but only if you’re working with an architecture that supports 8K page sizes, and unless you’re using an Itanium or an Alpha processor, you’re probably out of luck.
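The ext3 limits follow from the same kind of multiplication: 2^32 block numbers times the block size. The sketch below computes both cases and checks the page-size condition against the machine it runs on via `mmap.PAGESIZE`. The rule that the block size must not exceed the memory page size reflects the Linux behavior described above; the function names are only illustrative.

```python
import mmap

EXT3_BLOCK_NUMBERS = 2**32   # ext3 addresses blocks with 32-bit numbers


def ext3_max_bytes(block_size):
    """Largest ext3 filesystem for a given block size, in bytes."""
    return EXT3_BLOCK_NUMBERS * block_size


def block_size_mountable(block_size, page_size=mmap.PAGESIZE):
    """Assumed rule: Linux mounts ext3 only if a block fits in one memory page."""
    return block_size <= page_size


for bs in (4096, 8192):
    verdict = "OK here" if block_size_mountable(bs) else "needs larger pages"
    print(f"{bs // 1024}K blocks: {ext3_max_bytes(bs) // 2**40} TiB ({verdict})")
```

On a typical x86 machine, `mmap.PAGESIZE` is 4096, so only the 4K case is usable there.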

More recently, ext3 has been superseded by ext4. In theory, ext4 with 4K blocks supports up to 1 EB (exabyte, equal to 1 million TB), because its block numbers can be 48 bits wide. For now, however, limitations in the tools used to create ext4 filesystems still cap you at 16 TB. Hopefully, this will be fixed in the not too distant future.
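The gap between ext4’s design and its tools can be put in numbers. Assuming 4K blocks, the sketch below compares ext4’s 48-bit block numbers with a 32-bit block count; treating the tools’ 16 TB ceiling as a 32-bit block count is an assumption that matches the figure above.

```python
BLOCK_SIZE = 4096      # 4K blocks, as in the text
EXT4_BITS = 48         # ext4 block numbers can be up to 48 bits wide
TOOL_BITS = 32         # assumed: the creation tools count blocks in 32 bits


def max_fs_bytes(bits, block_size=BLOCK_SIZE):
    """Maximum filesystem size when block numbers are `bits` wide."""
    return 2**bits * block_size


design = max_fs_bytes(EXT4_BITS)      # 2**60 bytes = 1 EiB
practical = max_fs_bytes(TOOL_BITS)   # 2**44 bytes = 16 TiB
print(f"design limit: {design // 2**60} EiB, tool limit: {practical // 2**40} TiB")
print(f"the tools reach 1/{design // practical} of the on-disk format's capacity")
```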

Fortunately, Linux does support filesystems that can span large volumes. These include (but are not necessarily limited to) XFS (up to 16 EB) and JFS2 (up to 32 TB).
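To pull these figures together, here is a small, hypothetical helper that lists which of the Linux filesystems discussed above could hold a volume of a given size. The limits are the approximate ones quoted in this article at the time of writing, taken as decimal TB and EB, so treat the result as a rough first filter rather than a final answer.

```python
TB = 10**12
EB = 10**18

# Approximate Linux limits quoted in this article (at the time of writing).
FS_LIMITS = {
    "ext3 (4K blocks)": 16 * TB,
    "ext4 (current tools)": 16 * TB,
    "JFS2": 32 * TB,
    "XFS": 16 * EB,
}


def filesystems_for(volume_bytes):
    """Names of filesystems whose quoted limit covers the volume, in table order."""
    return [name for name, limit in FS_LIMITS.items() if limit >= volume_bytes]


for size_tb in (10, 32, 48):
    print(f"{size_tb} TB volume:", ", ".join(filesystems_for(size_tb * TB)))
```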

Conclusion

Eventually, these issues will be smoothed over, just like all the others that have surfaced throughout the history of computers. For now, however, one must take some time to plan how best to utilize large capacity volumes, as the software industry still has quite a bit of catching up to do.

eRacks Open Source Systems well understands the issues faced when dealing with so much storage, and will be more than happy to help you with your needs. Check us out at http://www.eracks.com, and call for a quote today!

james August 4th, 2010

Posted In: Uncategorized

Open Source Media Center Solutions

I’ve been evaluating various open source media center applications in an effort to put together a new unit, and have had the opportunity to weigh the relative pros and cons of each. Below, you’ll read about my findings and hopefully learn a little about what’s out there. So, without further ado, here’s a list of the packages I looked at, in order of preference.

1. Boxee

(http://www.boxee.tv/)

Boxee was my first pick. It has a slick interface, can draw from a variety of sources such as Hulu and YouTube out of the box, offers a plethora of plugins (called “applications”), is easy to navigate and has an interface well suited to a remote control. The biggest con for me is that, while the project itself is open source, you need to register for an account on their website in order to use it.

2. XBMC

(http://www.xbmc.org/)

XBMC, which stands for “Xbox Media Center,” was originally designed for the Xbox and has since been made available on the PC. It sports a very polished interface and, like Boxee, is easy to navigate and works well with a remote control. Support for online sources such as YouTube is missing out of the box, but there are plenty of plugins to help. Unfortunately, unlike Boxee or Moovida (which is next in our list), you have to go to external sources in order to find them (check out http://www.xbmczone.com/). Supposedly, it’s easy to install a plugin once you’ve downloaded it, but the directions I found online differed from how things worked in the latest version, and I ended up installing plugins manually by unzipping them and copying the files to the right directory.

3. Moovida

(http://www.moovida.com/)

Moovida, formerly known as Elisa, is another media center option. Like Boxee and XBMC, it sports an easy to navigate interface suited to a remote control and, unlike XBMC, makes finding, installing and updating plugins part of the application itself. I rated this one below XBMC because there aren’t many plugins available, and because XBMC’s interface is, in my opinion, slightly more polished.

4. Miro

(http://www.getmiro.com/)

My reason for rating Miro at the bottom isn’t that Miro is a bad application. In fact, I enjoyed using it. It comes with support for many video feeds by default and does a good job of organizing media. My problem, for our purposes, is that it’s not such a great application for set top boxes. The UI is easy to use, but I don’t think it would be as friendly when hooked up to a TV and driven with a remote control. Also, it’s difficult to add sources such as YouTube, as you have to manually add RSS feeds for the channels that interest you. Nevertheless, it’s a useful application, and I recommend giving it a try.

james August 6th, 2009

Posted In: media center, multimedia, Open Source

Tags: audio, media center, Open Source, video

The Folding@home Project

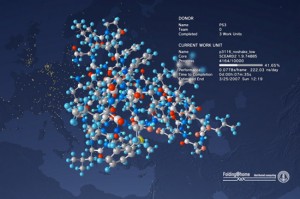

Do you have a server lying around someplace that spends a significant amount of time idle? If so, you might want to consider running the Folding@home client. Folding@home is a project based at Stanford whose goal is to simulate and study protein folding, which is crucial to understanding and developing better treatments for serious diseases such as Alzheimer’s.

An example of the Folding@home client on a PlayStation 3

Folding@home has been one of the most successful examples of distributed computing, in which individuals all over the world donate spare CPU cycles to perform calculations and send the results back to a central location. The project started back in October 2000, has since resulted in the publication of over 40 works, and has led to significant progress in the fight against Alzheimer’s. To date, the Folding@home project has been used to study Huntington’s disease, cancer, Alzheimer’s and Parkinson’s, among other serious medical conditions.

The Folding@home client runs on Windows, Mac OS X, PlayStation 3 and Linux, and will run in the background while your computer completes other tasks. If you want us to install it for you, just say so in the notes field of your order. In addition, if you wish to make a donation to the project, just specify “Donation Target: Other Open Source Project” and enter Folding@home in the notes field.

For more information about Folding@home, see http://folding.stanford.edu/

james June 16th, 2009

Posted In: Research

Tags: donate, fold, folding@home, protein, stanford

Learning How to Write Software for Free

Have you ever thought to yourself, “gee, it would be a lot of fun to learn how to write software,” but you didn’t want to shell out money for books or a development environment? Perhaps you’re just curious, or maybe you aspire to be a developer one day. Whatever your reason, thanks to open source software and free documentation, you can pick up the skills required with no cost to you (other than your time, of course.)

Where to Learn

Before you start writing code and playing with a compiler (a program that translates human-readable programs into instructions the computer can understand), you’ll first need to learn a programming language. You could spend anywhere from $30 to $70 on a book. Or, you could go online instead. Not only can you use Google to find countless tutorials for just about any programming language, you can also find sites that offer free e-book versions of published works (for an extensive collection of books on every subject, including quite a few on programming, check out http://www.e-booksdirectory.com/). For most of your programming needs, you’ll find that buying books really isn’t necessary.

As you grow in skill, you’ll find that learning by example is a powerful tool. Fortunately, with open source software, you have a plethora of real-world applications, their source code laid bare for all the world to see (source code is the human-readable version of a program). If you want to look at the implementation of a text editor, for example, you can check out the source code for projects like vim, nano or Emacs.

Do you also want to know how standard C library functions such as qsort() are implemented? Then check out the source code to Glibc (http://www.gnu.org/software/libc/). Are you more interested in systems programming? Check out the kernel source trees for Linux (http://www.kernel.org) or FreeBSD (http://www.freebsd.org). You’ll find open source software for just about any need, from web browsers to mail clients, from 3D modeling to audio and video editing. Whatever you want to look at, you’ll more than likely find examples written by others that can help you with your own projects.

Where to Get the Software

So, you already have at least some idea of what’s involved in programming, and you want to get your hands dirty by actually writing some code yourself. At the very least, you’ll need a text editor to write your code and a compiler or interpreter to run your programs. You may also want a more elaborate solution, such as an IDE (integrated development environment), which offers a one-stop solution for writing code and compiling/running your programs, all at the click of a mouse.

Either way, open source once again comes to the rescue. For C, C++ and a few other languages, you have the GNU Compiler Collection (http://gcc.gnu.org/). There are also various interpreted languages, such as Ruby, Python (http://www.python.org/) or Perl. If you’re looking for an IDE roughly like Microsoft Visual C++, you’ll find KDevelop, Eclipse or NetBeans, among others.
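Once an interpreter is installed, a first program can be very small. As an illustration in Python, one of the interpreted languages mentioned above, the script below prints a short temperature conversion table. The file name `first.py` is just an example; you would typically run it from a terminal with `python3 first.py`.

```python
def fahrenheit_to_celsius(fahrenheit):
    """Convert a temperature from degrees Fahrenheit to degrees Celsius."""
    return (fahrenheit - 32) * 5 / 9


if __name__ == "__main__":
    # Print a small table from freezing (32 F) to boiling (212 F) in 45-degree steps.
    for f in range(32, 213, 45):
        print(f"{f:>4} F = {fahrenheit_to_celsius(f):6.1f} C")
```

Splitting the conversion into its own function keeps it easy to test and reuse as the program grows.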

For more advanced needs, such as revision control (a means of tracking changes in software), you have applications like Subversion, Mercurial and Git (http://www.git-scm.org/).
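Revision control tools can show exactly what changed between two versions of a file as a line-by-line “diff” (for example, with `git diff`). As a rough illustration of that output format only, not of how Subversion, Mercurial or Git store history internally, Python’s standard `difflib` module can produce a similar unified diff. The file contents and the `unified` helper are made up for this example.

```python
import difflib


def unified(old_lines, new_lines, path):
    """Return a unified diff between two versions of a file as one string."""
    return "".join(difflib.unified_diff(
        old_lines, new_lines, fromfile=f"a/{path}", tofile=f"b/{path}"))


old = ["def greet(name):\n", "    print('Hello ' + name)\n"]
new = ["def greet(name):\n", "    print('Hello, ' + name + '!')\n"]

# Lines starting with "-" were removed, "+" were added, " " are unchanged context.
print(unified(old, new, "greet.py"), end="")
```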

There are many more applications for a variety of needs, so whatever you’re looking for, give Google a spin.

Conclusion

It is possible to learn how to develop software without breaking the bank. With free documentation and open source software, you have all the tools you need to learn as little or as much as you want. Here at eRacks, we understand the needs of the developer, and can provide you with a machine pre-loaded with all the software you need to write professional programs. Contact us, and ask for a quote today!

james June 1st, 2009

Posted In: Development, Open Source

Tags: book, c, compiler, Development, eclipse, free, gcc, git, gnu, IDE, interpreter, java, kdevelop, kernel, mercurial, netbeans, Open Source, perl, programming, ruby, subversion, tutorial

Open Source Software: A Student’s Dream Come True

If you’re a student like I am, you know how important it is to save money. Some students are too busy with their studies to work at all, and those who can work are usually only able to do so part-time. And, like books and tuition, software is a significant financial burden for the average student. While it’s true that student-licensed versions of software are significantly discounted, popular titles such as Microsoft Office will still cost you somewhere in the ballpark of $130. And of course, that’s only if you use the software for nothing other than your academic or personal endeavours. If you use the same applications on the job, you’ll find that you’re no longer eligible for student licenses, and suddenly that $130 magically turns into $300.

Fortunately, the current digital climate is rife with free software alternatives, which have the potential to save students (or parents!) hundreds of dollars.

The Operating System

Let’s start with the most fundamental bundle of software, the operating system (hereafter abbreviated as OS). The OS is what sits between the hardware and the user’s applications. Some examples are Microsoft Windows and Mac OS X.

For many students, purchasing an OS will be a non-issue, as most computers come with one pre-installed. For those in this category, most of the software mentioned below will run on both Windows and Mac. That being said, there are also a significant number of people who need to include an OS in their financial plans. Perhaps you purchased your computer used and without software. Or, maybe the OS on your machine is old and needs to be upgraded. You could have even assembled your own computer, as many hobbyists do.

It’s true that students can purchase Microsoft Windows at a discount of 30-60% off, but why would you do that when you can get your OS for free? Over the last few years, a veritable cornucopia of easy-to-use free software-based OSes has emerged, the most popular, and in my opinion the easiest to install and use, being Ubuntu (http://www.ubuntu.com/). For the more technically inclined and perpetually curious, there are a slew of other Linux distributions, as well as Sun’s OpenSolaris (http://www.opensolaris.org/) and the *BSD family of OSes: FreeBSD (http://www.freebsd.org/), NetBSD (http://www.netbsd.org/), OpenBSD (http://www.openbsd.org), PC-BSD (http://www.pcbsd.org/) and DragonFly BSD (http://www.dragonflybsd.org).

In reality, we do still live in a Windows world, so you may find yourself in a position where you have to use a program that only runs on Windows. Luckily, there’s a very mature and very complete open source implementation of the Windows API, called WINE (http://www.winehq.org/), that has been actively developed since 1993. You simply install WINE through the point-and-click interface provided by your OS and install your Windows applications on top of it. Many will run out of the box, and others will run with a minimal amount of tweaking.

Office Productivity

As mentioned earlier, a student copy of Microsoft Office will cost roughly $130, and in some cases, students won’t even qualify for the student license, making the product much more expensive. So then, simply by installing a single free software replacement, you’ve literally saved hundreds. There’s a fantastic open source alternative called OpenOffice (http://www.openoffice.org/), a spin-off from Sun Microsystems, Inc. The download is a little large (over 100MB), but the price tag is worth it (it’s free), and OpenOffice really is a solid application capable of doing anything Office can. It includes components that replace Word, Excel, Powerpoint and Access, as well as additional components for drawing and for editing HTML documents.

In addition, you’ll find Scribus (http://www.scribus.net/) for desktop publishing and the creation of professional quality PDFs and Dia (http://live.gnome.org/Dia) for drawing diagrams, roughly like Microsoft Visio.

Multimedia

Of course, no college-ready system is complete without the ability to play movies and music! Fortunately, open source has you covered there as well. With Totem (http://projects.gnome.org/totem/) and Xine (http://www.xine-project.org/), playing your videos on Linux is a snap (Windows and Mac users of course have their own respective built-in players and don’t have to worry about this.) As well, there are applications like Banshee (http://www.banshee-project.org/) that do a great job of managing your music (it also plays videos.)

You’ll also more than likely be managing a great many pictures. For editing them, you’ll find the GIMP (http://www.gimp.org/), which is very similar to Adobe’s Photoshop, and for browsing and managing your pictures there’s F-Spot (http://f-spot.org/).

You’ll only run into a couple of hitches when dealing with multimedia on an open source OS. The first is that you won’t be able to play many Windows Media files. Fortunately, this can be remedied by purchasing the Fluendo Windows Media Playback Bundle (http://www.fluendo.com/shop/product/windows-media-playback-bundle/). True, it’s not free, but at $20 it’s a small price to pay compared to the hundreds of dollars you’ll be saving on everything else, and if you can live without Windows Media, you can skip the expense. The second is that technically, according to the controversial Digital Millennium Copyright Act (http://www.copyright.gov/legislation/dmca.pdf), you’re in a legal predicament if you install software to decrypt your DVDs. More than likely nobody’s going to care, and the software to do so is readily available and in widespread use, but if you choose to play your DVDs on an open source OS, you should first take the time to thoroughly understand where you stand from a legal perspective. [Ed. note: there are fully licensed DVD players available for Linux. Even so, legal scholars now feel that this area of the DMCA has not yet been fully tested in court, and recent precedents suggest that if it were, Fair Use doctrine would win out over the DMCA in the end. – Ed.]

A Plethora of Other Goodies

Depending on your field of study, you’ll find many other professional-quality free and open source applications, outside the scope of this blog, that will save you even more money. Just google around. You’ll find all sorts of amazing applications, all of them free.

Conclusion

Fellow students, throw off the shackles of expensive proprietary software and embrace the freedom of open source. Not only will you save hundreds of dollars, you’ll be drawn into a community of users and developers who are passionate about writing and supporting software. Once you get used to free software alternatives like these, you’ll wonder how you ever got by without them.

Here at eRacks, we specialize in providing users of all kinds with open source solutions to meet their needs. So contact us today, and ask us how we can help you save money and get even more out of your academic experience!

james April 20th, 2009

Posted In: How-To, multimedia, Open Source, Reviews, ubuntu

Tags: FLOSS, Open Office, recession-proof, Review, unix